Introduction

The display of time-based media art requires at least three elements to be taken into consideration: the media itself, the display equipment and the display environment, including the display space and the way in which the work is encountered. An example from current practice at Tate is to create an mp4 file to be played back on a BrightSign player1 and projected using a video projector. To ensure that a video file is displayed correctly in the gallery it is essential to consider not only the files and their specifications but also the specifications of the playback and display equipment. Assessments of such files and the images they contain may be carried out with consideration of different technical environments and conditions and at different moments in time, such as when a file enters the Tate collection, either supplied by the artist or as the result of tape digitisation. The accurate interaction of digital video files with display software and hardware depends on the clarity and alignment of technical specifications, which may be expressed, inferred or presumed. This paper reviews compatibility and adherence issues pertaining to the display of video files.

Adherence and Assessment

The assessment of many forms of time-based media is accomplished through two distinct and disparate experiences. The presentation or performance of time-based media may be assessed by sensing and evaluating the images, sounds and experience created by the work. Distinctly, the work may also be evaluated through examination of the audiovisual recordings, code and materials used. In many cases the experience of a time-based work only includes the sensory input of the performance and no direct interaction with the objects, such as when an audience looks at a screen and listens to speakers but has no direct experience of the form of the objects used to create that experience. Alternatively, a conservator may examine a film print, videotape or source code but not have access to the hardware, software or tools required to render the intended performance from those materials.

Audiovisual objects such as audiovisual files, videotape or film contain a variety of format-specific characteristics that affect how they should be presented; if such characteristics are expressed or interpreted improperly the entire presentation could be compromised. Additionally, some formats are particularly vague or tacit about certain significant characteristics; for example, a videotape may not give any indication of how the audio channels should be presented, or a video file may not clearly state its aspect ratio or color space.

The hardware, software and tools to present complex media are accompanied by layers of expectations and requirements, expressed in technical writing or unspoken and assumed. Minor deviations in the form or description of a complex media object can have a substantial impact on whether that presentation can be generated properly or at all. Assessing complex media against presentation or conservation requirements requires an analysis beyond the exterior form. For instance a VHS player may be able to play VHS tapes recorded in NTSC but not in PAL or be able to play tapes recorded in SP or LP mode but not SLP (SLP requires a 4-head VHS player). Or a video player may support h264 encodings up to level 4.2 but not above or support files with an ‘.mpg’ extension but not an ‘.mpeg’ extension. The determination of what hardware, software or environment is required to successfully render an intended presentation can require a detailed and structural understanding of the formats involved.

However, this understanding is complicated by the fact that audiovisual formats use differing terminology to express overlapping concepts. Audiovisual formats may contain contradictory information about their own characteristics or leave this information unclear, potentially creating interoperability issues. For example, a file consisting of a QuickTime wrapper and a DV stream may have metadata indicating that the stream is to be displayed as 16:9 aspect ratio, while the metadata in the QuickTime wrapper indicates a 4:3 ratio.2

Some metadata tools exist (such as MediaInfo, FFmpeg, exiftool) that will describe and summarize the significant characteristics of an audiovisual file so that we may answer questions such as ‘Does this video use a 16:9 aspect ratio?’ or ‘Does this use h264?’; however, answering questions such as ‘Is this sufficiently self-descriptive?’ or ‘Is this unnecessarily complicated?’ or even ‘Why doesn’t this file work with this software?’ requires delving deeper. Additionally, such tools generally do not state why they come to a particular conclusion; for instance, is the tool reporting that the aspect ratio is 16:9 because of a clearly expressed ratio in a QuickTime ‘pasp’ (pixel aspect ratio) element, or is the ratio inferred only from the frame’s width and height?

Throughout conservation and access workflows, technical expectations or policies are regularly expressed: requirements that must be met for a given file to work in a given player, the manual supplied with a projector, or technical requirements that may be included within the acquisition of an object. However, there is a gap between defining or interpreting such requirements and assessing a file’s adherence to them. For example, the specifications of a projector cannot be directly connected to a digital file for a yes or no answer; some other tool or specialist needs to interpret both the specifications and the properties of the file to determine adherence. Within this particular step of media assessment there is opportunity for more standardisation and automation.

There is precedent for efficient machine-readable expression of policy and evaluation tools in other areas. For example, the requirements for an XML Document may be expressed within an XML Schema. An XML validator can be used to apply the XML Schema to the XML Document and return a simple yes or no as to whether the XML Document is valid, or a description of the issues if not. Currently, to accomplish the same with complex media requires the conservator to perform the role of the validator through assessment and experimentation. In the next section we address the possibility of making this validation less time consuming through the application of machine-readable policies.

Policies

While the main focus of this paper is on policies determined by specific technical environments, other policies governing an audiovisual artwork might be determined by parameters specified by the artist and by conservation practice and ethics. During the acquisition process a conservator may recommend particular formats or characteristics. The conservation of the same materials may use different requirements as the collection reacts to obsolescence issues and updates technical policies. Again, when materials are exhibited, the hardware, software or tools used to support the exhibition often bring their own requirements, as the exhibited material must adhere to the technical forms that the exhibition environment can support. Often the requirements of exhibition tools, display tools and conservation practice cannot all be satisfied by the same formats, requiring the use of several renditions of the same content for different purposes. All of these requirements throughout the conservation process can be seen as policies. Policies can exist in the clearly defined technical requirements of exhibition hardware that specify what forms of media are supported; they may be documented preservation policies or recognised as current conservation practice; or they may be requirements for the work specified by the artist. For example, an artist may require that the exhibition of an audiovisual form occur in the original format and thus necessitate the procurement of custom software or hardware to support that intent.

A straightforward use of policies is in checking files resulting from migrating from tape to file-based video through digitization. In this process a large number of files may be produced in a short period of time, and it is essential that their characteristics are consistent and expected. In this case the use of a policy defining those characteristics can make the process faster and clearer.

Expressing and evaluating media collections against defined policies and using the outcome of policy tests to inform decision making can help to establish better control over collections and increased utilization of materials. However, the technical properties of complex media works are often challenging to assess, as an understanding of those properties requires specialized tools. Some display hardware may have requirements such as an mpeg2 stream with two b-frames per one i-frame or a constraint that a QuickTime header may not be compressed; determining adherence to such policies requires technical information beyond what the ‘info window’ of most playback software provides. Additionally, the policies written for exhibition hardware to define supported media, those written for playback software and those conceived by a conservator may use conflicting terminology and expressions.

This paper will look specifically at three categories of policies:

- Compatibility requirements (for media with hardware/software)

- Adherence to technical profiles (such as TN2162 for Uncompressed video in QuickTime)

- Comparison of files (to determine how alike or different a set of files may be)
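The third category, comparison, amounts to asking which reported properties differ across a set of files. As a minimal sketch of that idea, the Python below takes per-file property summaries and reports only the fields whose values are not identical everywhere. The function name `differing_fields`, the file names and the property names are all hypothetical and chosen for illustration; they are not output from MediaInfo or MediaConch.

```python
def differing_fields(files):
    """Map each field whose value is not identical across all files
    to a dictionary of its per-file values."""
    fields = set().union(*(props.keys() for props in files.values()))
    report = {}
    for field in sorted(fields):
        values = {name: props.get(field) for name, props in files.items()}
        if len(set(values.values())) > 1:
            report[field] = values
    return report


# Hypothetical property summaries for three files from one digitisation batch
batch = {
    "tape01.mov": {"Width": 720, "Height": 576, "BitDepth": 10},
    "tape02.mov": {"Width": 720, "Height": 576, "BitDepth": 10},
    "tape03.mov": {"Width": 720, "Height": 486, "BitDepth": 10},
}

for field, values in differing_fields(batch).items():
    print(field, values)
```

A field that is missing from one file is treated as differing (its value is reported as `None`), which seems the safer choice when the absence of a property is itself significant.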

MediaConch

In 2014, PreForma, a project co-funded by the European Commission under its FP7-ICT Programme, began work to develop three conformance checkers for a select list of file formats. One of the goals of the PreForma project was to design methods and tools to validate file formats against expressed policy. The audiovisual project of PreForma, MediaConch3, developed a policy expression format called MediaPolicy4 and a method to validate media formats against it in MediaConch5.

MediaPolicy provides a structure to depict a policy based upon the language of MediaInfo (which provides a summarization of significant digital media characteristics) and MediaTrace (which provides a method for expressing the entire architecture of a digital media file).

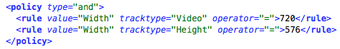

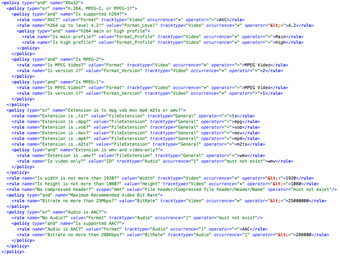

A simple policy to say that the video track’s frame width must be 720 and height must be 576 could be expressed like this:
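Purely to illustrate the logic that such a rule encodes, a minimal Python sketch follows. It is not MediaPolicy syntax: the function name `check_frame_size` and the dictionary keys `Width` and `Height` are assumptions made for this example.

```python
def check_frame_size(video_track, width=720, height=576):
    """Return (passed, failures) for a policy requiring an exact frame size."""
    failures = []
    if video_track.get("Width") != width:
        failures.append(f"Width is {video_track.get('Width')}, expected {width}")
    if video_track.get("Height") != height:
        failures.append(f"Height is {video_track.get('Height')}, expected {height}")
    return (not failures, failures)


# Hypothetical metadata for two video tracks
pal_track = {"Width": 720, "Height": 576}
ntsc_track = {"Width": 720, "Height": 486}

print(check_frame_size(pal_track))   # passes: both rules are met
print(check_frame_size(ntsc_track))  # fails: the height rule is not met
```

Returning the list of failed rules alongside the yes or no answer mirrors the behaviour of an XML validator, which reports the issues found rather than only the verdict.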

A more complex policy6 to say that the video must use width of 720 with either a frame rate of 25 fps with a height of 576 or a frame rate of 29.970 and a height of 486 is expressed like this:
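The nesting of ‘and’ and ‘or’ conditions in such a policy can be sketched in Python as follows. Again this is an illustration of the logic rather than MediaPolicy syntax; the helper names `rule`, `all_of` and `any_of` and the field names are hypothetical, and frame rates are compared as strings here only to avoid floating-point comparison in a short example.

```python
def rule(field, expected):
    """Leaf rule: the named field must equal the expected value."""
    return lambda track: track.get(field) == expected


def all_of(*rules):
    """Passes only if every nested rule passes ('and')."""
    return lambda track: all(r(track) for r in rules)


def any_of(*rules):
    """Passes if at least one nested rule passes ('or')."""
    return lambda track: any(r(track) for r in rules)


# Width 720 AND ((25 fps AND height 576) OR (29.970 fps AND height 486))
sd_policy = all_of(
    rule("Width", 720),
    any_of(
        all_of(rule("FrameRate", "25.000"), rule("Height", 576)),
        all_of(rule("FrameRate", "29.970"), rule("Height", 486)),
    ),
)

# Hypothetical track metadata
print(sd_policy({"Width": 720, "FrameRate": "25.000", "Height": 576}))  # True
print(sd_policy({"Width": 720, "FrameRate": "25.000", "Height": 486}))  # False
```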

Using such expression allows for technical audiovisual policies to be expressed in a common format.

Compatibility Requirements and BrightSign

The exhibition of complex media is dependent on hardware, software and tools, each with particular requirements for what it can support. A film projector will generally only support transporting a single film format, but the lens and audio readers will also limit what types of film can be properly presented. Just as a 35mm projector with a limited set of lenses may not support proper presentation of a Cinemascope print, computer software is also limited in what audiovisual features it can support. For example, software may only support certain combinations of containers and encodings and only within certain parameters.

For example, the BrightSign XDx32 player lists these restrictions on playback support7:

- Supported Codecs: H.264, MPEG-2, MPEG-1

- Resolution: The maximum supported resolution is 1920x1080. Note that some supported VESA video modes have limitations.

- Supported Extensions/Containers: .ts, .mpg, .vob, .mov, .mp4, .m2ts

- Note: .mov files with compressed ‘atoms’ (i.e. metadata) are not currently supported.

- Additionally Supported Extensions: .wmv files that are exported from PowerPoint (i.e. video-only .wmv without .wma audio).

- Specs for H.264 Extensions/Containers: Support for Main and High Profiles up to level 4.2

- Specs for MPEG-1 Extensions/Containers: System streams only (elementary streams are not supported)

- Maximum Recommended Video Bit Rate: 25Mbps

- Audio Support: AAC audio (CBR/VBR) up to 288Kbps

Determining whether a media file adheres to these requirements requires quite a bit of research, including knowledge of:

- The video codec used

- The frame size of the video

- The extension of the file

- If it is mov, whether the file uses compressed atoms

- If it is wmv, whether the file has no audio

- If it uses h264 video, the profile and level

- The bitrate of the video

- The bitrate of the audio
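Once those properties are known, the published restrictions can be applied mechanically. The Python sketch below is one hedged reading of the list above, not BrightSign’s or MediaConch’s own logic. The function `check_brightsign` and every key of the input dictionary are hypothetical. Several simplifications are assumptions of this sketch: the MPEG-1 system stream requirement is left out, a .wmv file is only checked for the absence of audio, the recommended bit rate is treated as a hard limit, and the compressed-header check is applied whatever the extension.

```python
SUPPORTED_VIDEO_CODECS = {"H.264", "MPEG-2", "MPEG-1"}
SUPPORTED_EXTENSIONS = {".ts", ".mpg", ".vob", ".mov", ".mp4", ".m2ts"}
SUPPORTED_H264_PROFILES = {"Main", "High"}
MAX_H264_LEVEL = (4, 2)
MAX_VIDEO_BIT_RATE = 25_000_000  # bits per second (recommended maximum)
MAX_AUDIO_BIT_RATE = 288_000     # bits per second


def parse_level(level):
    """Turn a level string such as '4.2' into a comparable tuple (4, 2)."""
    return tuple(int(part) for part in level.split("."))


def check_brightsign(props):
    """Return a list of deviations from the listed restrictions (empty if none)."""
    problems = []
    ext = props["extension"].lower()
    audio = props.get("audio_codec")

    if ext == ".wmv":
        # Only video-only .wmv files are listed as supported.
        if audio:
            problems.append(".wmv is only supported without audio")
    else:
        if ext not in SUPPORTED_EXTENSIONS:
            problems.append(f"extension {ext} is not supported")
        if props["video_codec"] not in SUPPORTED_VIDEO_CODECS:
            problems.append(f"video codec {props['video_codec']} is not supported")
        if audio and audio != "AAC":
            problems.append(f"audio codec {audio} is not supported")
        if audio and props.get("audio_bit_rate", 0) > MAX_AUDIO_BIT_RATE:
            problems.append("audio bit rate exceeds 288 Kbps")

    if props["width"] > 1920 or props["height"] > 1080:
        problems.append("resolution exceeds 1920x1080")
    if props.get("compressed_header"):
        problems.append("compressed header (compressed atoms) is not supported")
    if props["video_codec"] == "H.264":
        if props["h264_profile"] not in SUPPORTED_H264_PROFILES:
            problems.append(f"H.264 profile {props['h264_profile']} is not supported")
        if parse_level(props["h264_level"]) > MAX_H264_LEVEL:
            problems.append(f"H.264 level {props['h264_level']} is above 4.2")
    if props.get("video_bit_rate", 0) > MAX_VIDEO_BIT_RATE:
        problems.append("video bit rate exceeds the recommended 25 Mbps")
    return problems


# Hypothetical file description; in practice these values would come from a metadata tool
example = {
    "extension": ".mov", "video_codec": "H.264", "width": 1920, "height": 1080,
    "h264_profile": "High", "h264_level": "5.1", "compressed_header": True,
    "video_bit_rate": 20_000_000, "audio_codec": "AAC", "audio_bit_rate": 192_000,
}
for problem in check_brightsign(example):
    print(problem)
```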

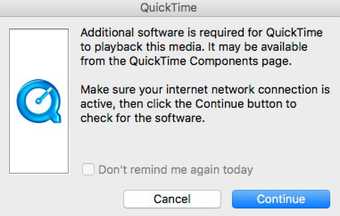

Much of this detail is easily found with accessible metadata tools for media such as MediaInfo, exiftool or FFmpeg. However, only a few of these tools report on h264 profiles, h264 levels, or whether the file uses compressed atoms, a feature not currently supported by the BrightSign XDx32 player. Compressed atoms are a feature used in QuickTime when it is important to minimize the size of the file by compressing the file header as well. This adds a ‘cmov’ atom within the ‘moov’ atom to contain the compressed header and is expressed by MediaInfo as ‘Format settings: Compressed header’.
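The structural test for a compressed header can be sketched directly. QuickTime atoms begin with a 4-byte big-endian size followed by a 4-byte type, so a short Python routine can walk the top-level atoms, find ‘moov’ and look for a ‘cmov’ child. The sketch below works on bytes already in memory and is tested only against synthetic data built by the hypothetical helper `atom`; the function names are assumptions of this example and it is not how MediaInfo or MediaConch are implemented.

```python
import struct


def iter_atoms(data, start=0, end=None):
    """Yield (type, body_start, atom_end) for each atom found in data[start:end]."""
    end = len(data) if end is None else end
    pos = start
    while pos + 8 <= end:
        size, kind = struct.unpack(">I4s", data[pos:pos + 8])
        header = 8
        if size == 1:
            # A size of 1 means a 64-bit extended size follows the type.
            if pos + 16 > end:
                break
            size = struct.unpack(">Q", data[pos + 8:pos + 16])[0]
            header = 16
        elif size == 0:
            # A size of 0 means the atom runs to the end of the enclosing span.
            size = end - pos
        if size < header or pos + size > end:
            break  # malformed atom; stop rather than guess
        yield kind.decode("latin-1"), pos + header, pos + size
        pos += size


def has_compressed_header(data):
    """True if the first 'moov' atom contains a 'cmov' child atom."""
    for kind, body_start, body_end in iter_atoms(data):
        if kind == "moov":
            children = iter_atoms(data, body_start, body_end)
            return any(child == "cmov" for child, _, _ in children)
    return False


def atom(kind, payload=b""):
    """Build a synthetic atom for testing (hypothetical helper)."""
    return struct.pack(">I4s", 8 + len(payload), kind) + payload


plain = atom(b"ftyp", b"qt  ") + atom(b"moov", atom(b"mvhd", b"\x00" * 4))
compressed = atom(b"ftyp", b"qt  ") + atom(b"moov", atom(b"cmov", b"\x00" * 4))

print(has_compressed_header(plain))       # False
print(has_compressed_header(compressed))  # True
```

For real video files, which can be very large, a practical version would seek through the file from atom to atom rather than load it into memory.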

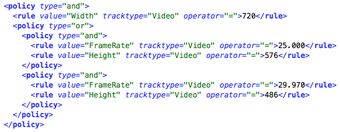

In the example below ‘presentation123.mp4’ uses a compressed header. This means that the data of the ‘moov’ atom which contains significant properties of the file is itself compressed and stored within a ‘cmov’ atom. This is allowed by the QuickTime specification in order to further reduce storage space, but the added complexity of this approach is not supported by some playback tools, such as this BrightSign player. When applying the BrightSign policy to a file with MediaConch the output can express deviation from the policy if any, such as:

Such an analysis allows a conservator to assess a video file against a policy expression to determine if the file will be supported by the player; if the file is not supported, this analysis will provide an indication as to what must be changed in order to gain support.

Uncompressed Video

TN2162

Best practices are often shared and refined to define a particular set of characteristics and technologies for a given purpose. Such practices are used particularly when media is digitised or exchanged in order to express expectations. Technical profiles are used not to align media with the support of any system in particular, but they are used and promoted as an agreement in the anticipation that systems and users will share support for a common practice or to promote community around collaborative technical decision making.

In audiovisual archiving, there are a few preferred file formats amongst the community including jpeg2000 in MXF, ffv1 in Matroska and uncompressed video in QuickTime. The QuickTime container is related to the ISO/IEC 14496-14 standard and widely supported by open-source and proprietary media applications in a variety of computer platforms. Uncompressed video requires a lot of space and time to work with but uses simpler technologies; however, uncompressed video in QuickTime has many risks because an uncompressed video encoding is not at all self-descriptive and must rely on the container to express all of its characteristics (such as frame size, color space, whether broadcast range or full range, sample ordering etc.).

In response to the interoperability and description challenges that may accompany uncompressed video in QuickTime, Apple has published ‘Technical Note TN2162: Uncompressed Y´CbCr Video in QuickTime Files’. The document outlines many of the objectives of this technical profile, which will be familiar to anyone facing the challenges of maintaining presentation consistency for media objects, such as:

Using these labels, application and device developers can finally relieve end users of the following nagging usability problems:

- ‘I captured this file on a Mac but it looks dark on a PC.’

- ‘I rendered to this file in app A; it plays ok in MoviePlayer but looks like snow in app B.’

- ‘I captured this data and the colors look wrong on my computer screen.’

- ‘I captured this data but the fields are swapped when I play it back. My application has 6 different field-related controls and I’ve tried all 64 combinations but none of them work.’

- ‘I captured and played this data OK, but whenever I try to render or do effects using this data, there are stuttery forward-backward motion problems.’

- ‘I captured this data and it looks squished or stretched horizontally.’

- ‘Circles I lay down in my application don’t look circular on the video monitor.’

- ‘I am compositing similar video footage which I had captured with two different devices, and the video data is shifted horizontally. No setting of captured image size seems to help.’8

The document defines a variety of additional requirements for uncompressed QuickTime files such as the expression of pixel aspect ratio, interlacement, color space and aperture. Such metadata may be optional in QuickTime when other more self-descriptive encodings are used, but as the Technical Note demonstrates, these values are more explicitly necessary for uncompressed video in QuickTime.

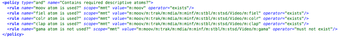

Thus a policy for uncompressed video may be more effectively communicated by saying that the media must be compliant with TN2162 rather than simply requested as ‘uncompressed video in QuickTime’. However, determining compliance with TN2162 is a challenge with the present outputs of MediaInfo, exiftool and other metadata tools. This is partly because such tools will strive to output certain key characteristics regardless of how they are determined. MediaInfo may say that a video has a display aspect ratio of 4:3 because this is stored explicitly by the pasp atom (as described in TN2162), or because this information is not clarified within the file and so, as a last resort, MediaInfo expresses display aspect ratio as only the ratio of the width to the height of the frame. In other words, MediaInfo makes efforts to express significant characteristics of media, but does not necessarily indicate whether that information is based on a presumption or on an explicit value in the media. Because of this, a policy to test a QuickTime file for adherence with TN2162 is mostly based upon reporting from the file’s structure via MediaTrace rather than the summarization provided by MediaInfo. For instance, a policy for TN2162 should test for the existence of certain QuickTime atoms and also test their contents:
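As a rough illustration of the kind of structural test involved, the Python sketch below checks a summary of a video track’s Image Description against the TN2162 points discussed in this paper: Image Description version 2, the presence of ‘colr’ and ‘fiel’, the absence of ‘gama’, and optionally the presence of ‘pasp’. It only checks presence, not atom contents, and covers only part of the Technical Note. The input structure and the name `check_tn2162` are hypothetical; this is not the MediaPolicy expression itself.

```python
def check_tn2162(image_description, require_pasp=True):
    """Return a list of findings for a subset of TN2162 requirements.

    image_description is a hypothetical summary such as
    {"version": 2, "atoms": ["colr", "fiel", "pasp"]}.
    """
    findings = []
    atoms = set(image_description["atoms"])

    if image_description["version"] != 2:
        findings.append(
            f"Image Description version is {image_description['version']}, expected 2"
        )
    for required in ("colr", "fiel"):
        if required not in atoms:
            findings.append(f"required atom '{required}' is missing")
    # TN2162 requires 'pasp' only for non-square pixels; a stricter local
    # policy may require it always so that the aspect ratio is never presumed.
    if require_pasp and "pasp" not in atoms:
        findings.append("atom 'pasp' is missing")
    if "gama" in atoms:
        findings.append("atom 'gama' is present but should not be used")
    return findings


# Hypothetical summaries of two files
compliant = {"version": 2, "atoms": ["colr", "fiel", "pasp"]}
legacy = {"version": 1, "atoms": ["colr", "gama"]}

print(check_tn2162(compliant))
for finding in check_tn2162(legacy):
    print(finding)
```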

A full MediaPolicy expression for TN2162 may be found in the MediaConch repository on GitHub and Apple’s documentation on TN2162 may be found at developer.apple.com.

Although Apple’s Technical Note details the importance of descriptive standards, in particular for uncompressed video in QuickTime, its media applications do not always adhere to such a recommendation. For instance, a ProRes file may contain interlacement data, color metadata and aperture information. If that file is opened in QuickTime Pro 7 and exported to uncompressed video in QuickTime then the contextual information on interlacement, color and aperture is lost. In addition to the presentation of the uncompressed output being distorted by the loss of context, the file is no longer compliant with Apple’s TN2162 recommendations. Without such information, the uncompressed file is likely to run into the same scenarios described in TN2162, such as ‘I captured this data and the colors look wrong on my computer screen.’

Uncompressed v. Compatibility

Although interoperability is often given as an advantage for uncompressed video in QuickTime, this is only true for a small subset of uncompressed video. Uncompressed video can vary by color space (gray, YCbCr or RGB), bit depth (1, 8, 10, 16 or other), chroma subsampling (4:2:0, 4:2:2, 4:4:4 or others), sample arrangement (UYVY v. YUYV) and chroma signedness (YVYU v. yuvu). QuickTime Pro 7 can encode uncompressed YCbCr video only if it is 4:2:2 at either 8 or 10 bit (2vuy and v210). QuickTime Pro 7 can also play those files, as well as uncompressed grayscale at 16 bit (b16g). However, QuickTime Pro 7 cannot play most other forms of uncompressed video, including uncompressed video at 4:2:0 (one of the most common chroma subsampling patterns).

Uncompressed video formats can differ in how signedness is used9. The majority of formats store chroma values as unsigned values representing an 8 bit range of 0 through 255. However a few uncompressed formats, such as yuvu and yuv2, use signed values that express a range of −128 to 127. If an uncompressed video with signed chroma values is interpreted as unsigned, the color values will be significantly off10.

Such a file could be converted to a more typical ‘2vuy’ uncompressed encoding in QuickTime to decrease interoperability issues.
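The signedness part of such a conversion reduces to simple arithmetic on each 8 bit chroma value: a signed value s in the range −128 to 127 corresponds to the unsigned value s + 128, which for the stored byte is the same as flipping its top bit. The Python sketch below shows this, along with how far off a neutral chroma value lands when misread. It covers only signedness and not any reordering of samples; the function names are hypothetical.

```python
def stored_byte(signed_value):
    """The byte actually written for a signed 8 bit value (two's complement)."""
    return signed_value & 0xFF


def signed_to_unsigned_chroma(byte_value):
    """Convert a chroma byte from the signed convention to the unsigned one.

    Flipping the top bit is equivalent to adding 128 to the signed value.
    """
    return byte_value ^ 0x80


# Neutral chroma is 0 in the signed convention and 128 in the unsigned one.
neutral = stored_byte(0)
print(neutral)                             # 0: misread as unsigned, far from neutral 128
print(signed_to_unsigned_chroma(neutral))  # 128: correctly converted
```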

Testing Uncompressed QuickTime Collections

As part of the research carried out at Tate a TN2162 MediaPolicy was created in order to test against a collection of thirteen uncompressed QuickTime files. Unfortunately, none of the files passed this TN2162 test.

The most common error was related to a requirement in TN2162’s section on ‘ImageDescription Structures and Image Buffers’, which lists how values of the Image Description section of a video track should be set. This includes a requirement that the version of the Image Description be set to ‘2’. None of the thirteen samples used a version of ‘2’, but instead used a mix of ‘0’ and ‘1’. The versions of the Image Description vary because over time Apple would add further contextual data to the Image Description to accommodate more modern types of data. TN2162 provides an appendix on how to handle the backward compatibility of versions 0 and 1, but only applies this section to 8 bit uncompressed video (either 2vuy or yuv2) so the meaning of 10 bit video (which covers most of the samples tested) is not clear in this context. The appendix notes that in this case ‘in general it is not possible to derive all the parameters’ and provides a list of best assumptions that could be made, but ultimately such a file may be at risk of interoperability issues as it depends on adherence to presumptions rather than clear self-description.

Other files were missing the ‘colr’ atom, which describes color space details such as transfer characteristics, color space and matrix coefficients. TN2162 requires the ‘colr’ atom, as otherwise the interpretation of color has to be presumed. Of the thirteen files tested, ten contained a ‘colr’ atom.

Similarly TN2162 requires a ‘fiel’ atom to define the interlacement properties of the file. Four of the thirteen files did not contain this information, so the handling of interlacement would have to be presumed.

TN2162 requires the ‘pasp’ atom, which documents aspect ratio information, only if the video should use non-square pixels. This is an unfortunate decision of the specification, as the lack of the ‘pasp’ atom implies that the pixels are square when very often this is not a correct presumption. Fortunately, only one of the thirteen samples tested did not include a ‘pasp’ atom. This file happens to use a frame size of 720x486 and, since it is an uncompressed QuickTime video without a ‘pasp’ atom, the display aspect ratio would be interpreted as 720:486, which simplifies to 40:27 (presenting the image a bit stretched horizontally, as it is intended to be shown at 4:3). According to TN2162 it is acceptable for an uncompressed QuickTime file to not use ‘pasp’, but it is important to include this requirement in an adherence policy for uncompressed video, as the lack of a ‘pasp’ atom can result in the incorrect presentation of the file.
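The arithmetic behind this example can be checked with exact fractions. The short Python sketch below reduces 720:486, compares it with the intended 4:3, and derives the pixel aspect ratio that would map the full 720x486 frame to exactly 4:3. That last figure is an arithmetic illustration only, not a value taken from TN2162; real files may declare a different pixel aspect ratio, for example in combination with a clean aperture.

```python
from fractions import Fraction

frame_ratio = Fraction(720, 486)  # ratio presumed when pixels are treated as square
intended = Fraction(4, 3)         # ratio at which the image is meant to be shown

print(frame_ratio)             # 40/27
print(frame_ratio / intended)  # 10/9: the image is shown about 11% too wide
print(intended / frame_ratio)  # 9/10: pixel aspect ratio that would yield 4:3
```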

The file referenced in the prior paragraph also happens to use a ‘gama’ atom, when TN2162 mandates that this atom should not be used for uncompressed video in QuickTime. Here the ‘gama’ atom provides a gamma level that can be used to adjust the image, but this contradicts the information of the ‘colr’ atom. TN2162 instructs that ‘readers of QuickTime files should ignore “gama” if “colr” is present’ and explicitly forbids QuickTime writers from using both ‘gama’ and ‘colr’ simultaneously as this file does.

The conclusions from these tests demonstrate that although Apple provides a specification for the use of uncompressed video in QuickTime, many files do not follow these rules. While TN2162 aims to address many of the interoperability issues that can impact uncompressed video, the impact of TN2162 has been inconsistent and interoperability issues and a lack of clarity in technical characteristics persist with uncompressed video in QuickTime.

These conclusions do not intend to specifically draw concern to uncompressed video in QuickTime relative to other common audiovisual preservation selections such as jpeg2000 in MXF or FFV1 in Matroska. Other formats are similarly vulnerable to poor and inconsistent implementation, misinterpretation or a lack of refinement in application. While these options all build upon very flexible technologies, there is certainly opportunity to refine these implementations with greater specificity for application towards conservation or preservation objectives by refining shared policies within user communities.

Conclusions

Although hardware/software vendors, conservators and systems may all define policies pertaining to the significant characteristics of audiovisual media, the application of these policies has often been too informal or too manual to be properly effective. The forums for software such as Adobe Premiere or hardware such as BrightSign players are filled with technical support conversations regarding how files do not work as expected, followed by requests for more details and guesses at what may be wrong. In audiovisual media work, the standardisation of policy language and methods to test files against policies would support more consistent and sustainable playback of media. The technical requirements documentation that accompanies media hardware could be translated into standardised machine-readable policies which software could use to assess large collections for compatibility. Similarly, the adoption of ‘best practices’ by audiovisual communities, such as the use of uncompressed video in QuickTime for digitization from videotape or normalization of video, could benefit from the standardisation of policy expression. This would make it more apparent to those caring for the files how far the files realise the intentions behind these practices.